Why You Should Avoid Prioritization Frameworks and How To Do It Right

Prioritize based on objectives and strategy, not spreadsheets and formulas

Bonus Content: I gave a talk on ProductBeats on this topic: How to Prioritize. You can see the video here: https://productbeats.com/blog/episode142

I sometimes get asked about product/feature prioritization frameworks. In particular, people ask which one(s) I suggest they use.

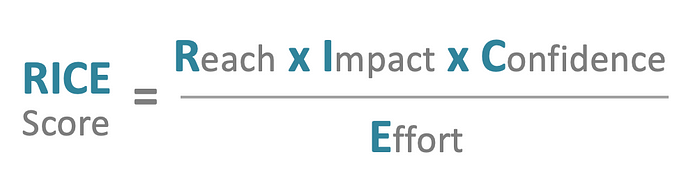

Before getting into the answer to that question, let’s define what we mean by prioritization frameworks. These are frameworks like RICE (Reach, Impact, Confidence, Effort), WSJF (Weighted Shortest Job First) etc. that are promoted as ways to help product and development teams decide how to prioritize features or work to be done.

Generally speaking, these frameworks require Product Managers or product teams to score each feature or initiative on the factors in the framework, then plug those scores into a formula to come to an overall score for each feature.

At that point you can rank your feature list etc. based on the prioritization scores, with highest scores being more important and prioritized first and lower scoring items done later.
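To make the score-and-rank workflow concrete, here is a minimal Python sketch using RICE as it is commonly stated (Reach × Impact × Confidence / Effort). The `Item` class, the feature names and every number below are hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """One backlog entry with its (subjective) RICE inputs."""
    name: str
    reach: float
    impact: float
    confidence: float
    effort: float


def rice_score(item: Item) -> float:
    # RICE as commonly stated: (Reach * Impact * Confidence) / Effort
    return item.reach * item.impact * item.confidence / item.effort


def rank(items):
    # Highest score first, exactly as the frameworks suggest.
    return sorted(items, key=rice_score, reverse=True)


# Hypothetical backlog; all values are invented for this sketch.
backlog = [
    Item("Feature A", reach=500, impact=2, confidence=0.8, effort=4),
    Item("Feature B", reach=1500, impact=1, confidence=0.5, effort=5),
    Item("Feature C", reach=300, impact=3, confidence=1.0, effort=2),
]

ranked = rank(backlog)
for item in ranked:
    print(f"{item.name}: {rice_score(item):.0f}")
```

The mechanics really are this simple, which is part of the appeal. Everything interesting is hidden in where the input numbers come from.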

It all works like magic; and if you have a nice spreadsheet, you can score hundreds of features in very little time. Sounds foolproof, right?

I’ll be honest, I don’t recommend any of these types of frameworks because they actually lead you down a path of prioritization theatre, i.e. they look like they are helping, but in most cases they are not. Here’s why:

Don’t prioritize features

You shouldn’t be prioritizing “features”. Yes, I said that. Features are implementations that are linked to problems or opportunities. You should be focused on prioritizing problems and opportunities that are tied to higher level goals and strategies. You should not prioritize features.

Why do you have so many features to prioritize?

If you have so many “features” to implement that you need a framework and spreadsheet to prioritize them, then you have another problem that needs to be solved first. Where are all these features coming from? Why are you not able to reduce them by other means? This usually indicates a lack of strategy and understanding of real customer and market problems.

The frameworks often work on guesstimates

Frameworks like RICE, WSJF etc. take “guesstimates” or qualitative assessments as inputs and feed them into an equation.

e.g. RICE stands for Reach, Impact, Confidence, Effort. Each of those is a subjective assessment that is turned into a number and then used in a calculation.

There are several problems with this approach. The three main issues are:

- These are not ratio scale numbers that can be put into an equation.

- The values defined and used are quite arbitrary and vary from team to team.

- What’s missing in those subjective assessments is the margin of error (MoE) associated with each factor, and nowhere in the calculation is it included.

NOTE: I won’t elaborate on the first issue (ratio scale numbers) here, but if you want to learn more about the meaning and implications of ratio scale numbers, you can do so here. It’s actually very important, and once you understand this, you’ll realize that these formulas are completely, mathematically invalid.

The Importance of Margin of Error

Why is Margin of Error important? Here’s the equation to calculate the RICE score:

RICE score = (Reach × Impact × Confidence) / Effort

Each factor (Confidence, Effort etc.) is clearly not an analytic value. It’s a subjective assessment, often defined as Low, Medium, High, or some other similar model. Those assessments are typically converted into some numerical values. e.g. for Confidence, it might look like:

- 1.0 = high confidence

- 0.7 = medium confidence

- 0.5 = low confidence

NOTE: Those values are completely arbitrary and will vary from team to team. e.g. I’ve seen people use a scale of 1–10 while others might have 1.0, .5, .25 for high, medium, low.
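A small Python sketch shows how much the choice of scale alone can matter. It uses the two Confidence scales mentioned above; the two features and their Reach, Impact and Effort numbers are hypothetical and chosen only to illustrate the effect.

```python
# Two teams, same labels, different (equally arbitrary) numeric scales.
TEAM_ONE = {"high": 1.0, "medium": 0.7, "low": 0.5}
TEAM_TWO = {"high": 1.0, "medium": 0.5, "low": 0.25}

# Hypothetical features: (reach, impact, confidence label, effort).
FEATURES = {
    "Feature X": (1000, 2, "low", 2),
    "Feature Y": (400, 2, "high", 2),
}


def rank_with(scale):
    """Rank features by RICE score using the given Confidence scale."""
    def score(name):
        reach, impact, conf_label, effort = FEATURES[name]
        return reach * impact * scale[conf_label] / effort

    return sorted(FEATURES, key=score, reverse=True)


team_one_order = rank_with(TEAM_ONE)
team_two_order = rank_with(TEAM_TWO)

print("Team one:", team_one_order)
print("Team two:", team_two_order)
```

Both teams agree on every judgment (the same labels for the same features), yet in this example the ranking flips purely because "low" maps to 0.5 for one team and 0.25 for the other.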

EXPERIMENT: If you were to run an experiment, you’d see the problem first hand. Take a team and split it in two. Give each team the same list of “features” to “prioritize”.

Tell them to use RICE and make sure they understand what it is and how to use it. But let each team decide the values etc. they’ll use. Send them off to separate rooms (or Zoom Rooms) and then bring them back together after they are done and compare their top 10 (or so) priorities. I guarantee you they will be VERY different.

Now comes the fun part. Ask them to explain the values they used in RICE, and why they chose those (e.g. the values they used to define high, med, low confidence etc.) and also explain the values behind their top 3 ranked items. It will be eye opening, I guarantee it.

BTW, if the two teams come up with the same (or VERY similar, e.g. 7 out of 10 ranked the same) lists, that’s great. It means they were in very high alignment, and I’ll owe you a drink. But I doubt that will ever happen.

Effort could be approximated by story points or some other approximation model. You see the issue, right? There is margin of error (MoE) in each of these approximations.

If you actually include some meaningful MoE on each value, you end up with a LARGE total margin of error on the final results.

Why? Because when you multiply/divide factors that each carry a MoE, the relative MoE of the result is, to a first approximation, the sum of the individual ones. So if there is a +/- 20% MoE on each factor, you get roughly +/- 80% for the resulting score when using RICE, i.e. +/- 20% times 4 factors. The more factors, the more potential margin of error.
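The arithmetic can be checked with a short Python sketch. It assumes every factor can be off by the same relative amount in either direction and computes the worst-case bounds directly; the function name and the input values are hypothetical. Adding up the percentages is a first-order rule of thumb, and the exact worst-case range is asymmetric: with 20% on each of the four factors, the score can land anywhere from about 57% below to about 116% above the nominal value.

```python
def rice_bounds(reach, impact, confidence, effort, moe=0.20):
    """Return (low, nominal, high) RICE scores under a worst-case MoE.

    Assumes each input may be off by +/- `moe` (relative), independently.
    """
    nominal = reach * impact * confidence / effort

    # Lowest score: every numerator factor is too low, effort is too high.
    low = (reach * (1 - moe)) * (impact * (1 - moe)) * (confidence * (1 - moe)) \
        / (effort * (1 + moe))

    # Highest score: every numerator factor is too high, effort is too low.
    high = (reach * (1 + moe)) * (impact * (1 + moe)) * (confidence * (1 + moe)) \
        / (effort * (1 - moe))

    return low, nominal, high


# Hypothetical inputs, for illustration only.
low, nominal, high = rice_bounds(reach=1000, impact=2, confidence=0.7, effort=5)
print(f"nominal={nominal:.0f}, plausible range={low:.0f}..{high:.0f}")
print(f"relative range: {low / nominal - 1:+.0%} to {high / nominal - 1:+.0%}")
```

Whichever way you compute it, the spread around a single RICE score is far larger than the gaps between most scores on a typical list.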

If you look at a bunch of numbers with such huge margins of error, it’s impossible to use them in a meaningful way.

What’s the difference between 55, 75 and 30? A lot.

What’s the difference between, say, 55 +/- 80%, 75 +/- 80% and 30 +/- 80%? In reality, nothing. The MoE is really important.
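A quick way to see why those three scores are indistinguishable is to turn each one into a range and check whether the ranges overlap. The sketch below does that in Python; the helper names are hypothetical.

```python
def interval(score, moe=0.80):
    """Range a score could really fall in, given a relative MoE."""
    return score * (1 - moe), score * (1 + moe)


def overlaps(a, b):
    """True if two (low, high) ranges share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]


scores = [55, 75, 30]
ranges = [interval(s) for s in scores]

# Compare every pair of ranges exactly once.
all_overlap = all(
    overlaps(a, b)
    for i, a in enumerate(ranges)
    for b in ranges[i + 1:]
)

for score, (low, high) in zip(scores, ranges):
    print(f"{score}: roughly {low:.0f} to {high:.0f}")
print("Every pair overlaps:", all_overlap)
```

When every range overlaps every other range, the ordering of the nominal scores tells you nothing reliable about which item is actually "higher".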

The same holds true for WSJF. The formula is different, but the issues are the same. The Cost of Delay (Business Value, Time Criticality, Risk Reduction), and ESTIMATED Size all have significant error bars associated with them but are completely ignored in the calculation.
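The same check can be sketched for WSJF. This assumes the commonly described form of the formula, where Cost of Delay is the sum of the three components named above and is divided by the estimated size. The function names, the single shared MoE and the input values are hypothetical simplifications.

```python
def wsjf(business_value, time_criticality, risk_reduction, job_size):
    """WSJF as commonly described: Cost of Delay divided by job size."""
    cost_of_delay = business_value + time_criticality + risk_reduction
    return cost_of_delay / job_size


def wsjf_bounds(business_value, time_criticality, risk_reduction, job_size,
                moe=0.20):
    """Worst-case (low, high) WSJF if every input may be off by +/- moe."""
    cost_of_delay = business_value + time_criticality + risk_reduction
    low = cost_of_delay * (1 - moe) / (job_size * (1 + moe))
    high = cost_of_delay * (1 + moe) / (job_size * (1 - moe))
    return low, high


# Hypothetical relative estimates, for illustration only.
nominal = wsjf(8, 5, 3, 4)
low, high = wsjf_bounds(8, 5, 3, 4)
print(f"nominal={nominal:.2f}, plausible range={low:.2f}..{high:.2f}")
```

Even with only a 20% error on each estimate, the score in this example can be anywhere from about a third lower to half again as high as the number the spreadsheet reports.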

You cannot turn approximates into absolutes just by ignoring the uncertainty.

When you consider the lack of ratio scale numbers and the ignored Margin of Error, these frameworks have the feeling of being analytical, but in reality are the exact opposite.

BTW, another problem with WSJF is that it conflates priority with sequence. Its definition says:

Weighted Shortest Job First (WSJF) is a prioritization model used to sequence work for maximum economic benefit.

Note that prioritization (what’s important) and sequencing (order of work or activity) are VERY different. Just because something is important, it doesn’t mean we do it first as there may be dependencies or other optimizations that must be understood.

e.g. A roof on a house is REALLY important, but we don’t and can’t build it first.
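The house example can be sketched in Python to show that the two orderings come from different information. Priority comes from an importance score, while sequence comes from dependencies, resolved here with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The importance numbers and the dependency map are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical importance scores: the roof matters most.
priority = {"roof": 10, "walls": 7, "foundation": 6}

# Each task maps to the set of tasks that must be finished before it.
depends_on = {
    "roof": {"walls"},
    "walls": {"foundation"},
    "foundation": set(),
}

# Priority order: most important first.
by_priority = sorted(priority, key=priority.get, reverse=True)

# Build sequence: an order that respects every dependency.
build_order = list(TopologicalSorter(depends_on).static_order())

print("By priority:", by_priority)
print("Build order:", build_order)
```

The most important item ends up last in the build order, which is exactly why a single ranked list cannot stand in for both questions.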

They promote bottom-up prioritization

These frameworks promote a bottom up mindset — i.e. prioritizing lists of features. In reality, prioritization starts with objectives and strategy and from there, market problems, user scenarios and use cases etc.

By the time you get down to features — i.e. implementation specifics — you’ve already done a LOT of prioritization. Any “features” that are important shouldn’t need some multi-factor calculation to prioritize.

That’s why I recommend everyone start with vision, objectives and strategies to narrow down focus and then use those as guide posts to decide on specific plans to implement.

One exception

There is one scenario where some of these frameworks can be helpful. I’ve used some of these frameworks — e.g. stack ranking, 2D (e.g. value vs. effort) prioritization — in discovery exercises with customers to help understand how THEY see value, impact etc.

The idea is NOT to use the customer statements to actually prioritize work, but to use these tools to elicit deeper discussions with them to understand WHY they see some things as important or high impact, or not important, low impact etc.

Ultimately, the job of Product Management is to get customer/market inputs/insights and marry that with internal goals to make the final prioritization decisions.

Those decisions should NOT be made using spreadsheets and large approximations.

P.S. And don’t even get me started about MoSCoW!!
